{'image': tensor([[[0.3059, 0.3059, 0.3098, ..., 0.3765, 0.3686, 0.3647],

[0.3020, 0.3020, 0.3098, ..., 0.3804, 0.3725, 0.3686],

[0.2941, 0.2980, 0.3059, ..., 0.3804, 0.3686, 0.3569],

...,

[0.4431, 0.4431, 0.4471, ..., 0.3373, 0.3333, 0.3333],

[0.4431, 0.4431, 0.4471, ..., 0.3373, 0.3333, 0.3294],

[0.4392, 0.4431, 0.4431, ..., 0.3412, 0.3333, 0.3294]],

[[0.3490, 0.3490, 0.3529, ..., 0.4275, 0.4196, 0.4157],

[0.3451, 0.3451, 0.3529, ..., 0.4314, 0.4235, 0.4196],

[0.3333, 0.3373, 0.3451, ..., 0.4314, 0.4196, 0.4078],

...,

[0.4941, 0.4941, 0.4980, ..., 0.3569, 0.3529, 0.3529],

[0.4941, 0.4941, 0.4980, ..., 0.3569, 0.3529, 0.3490],

[0.4902, 0.4941, 0.4941, ..., 0.3608, 0.3529, 0.3490]],

[[0.1922, 0.1922, 0.1961, ..., 0.3098, 0.3098, 0.3059],

[0.1882, 0.1882, 0.1961, ..., 0.3137, 0.3137, 0.3098],

[0.1882, 0.1922, 0.2000, ..., 0.3137, 0.3098, 0.2980],

...,

[0.4667, 0.4588, 0.4627, ..., 0.2314, 0.2275, 0.2275],

[0.4667, 0.4588, 0.4627, ..., 0.2314, 0.2275, 0.2235],

[0.4627, 0.4588, 0.4588, ..., 0.2353, 0.2275, 0.2235]]]),

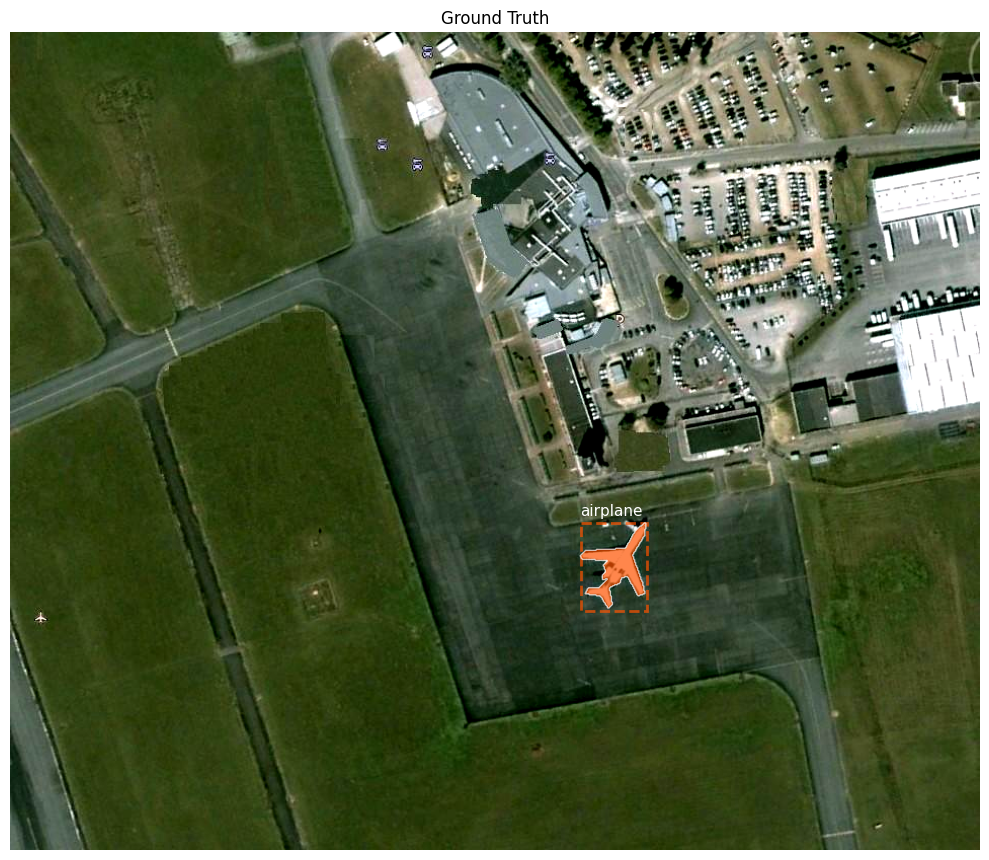

'boxes': tensor([[563., 485., 629., 571.]]),

'labels': tensor([1]),

'masks': tensor([[[0, 0, 0, ..., 0, 0, 0],

[0, 0, 0, ..., 0, 0, 0],

[0, 0, 0, ..., 0, 0, 0],

...,

[0, 0, 0, ..., 0, 0, 0],

[0, 0, 0, ..., 0, 0, 0],

[0, 0, 0, ..., 0, 0, 0]]], dtype=torch.uint8),

'prediction_boxes': tensor([[559.5670, 481.6908, 630.8933, 570.8891]]),

'prediction_labels': tensor([1]),

'prediction_scores': tensor([0.9885]),

'prediction_masks': tensor([[[0., 0., 0., ..., 0., 0., 0.],

[0., 0., 0., ..., 0., 0., 0.],

[0., 0., 0., ..., 0., 0., 0.],

...,

[0., 0., 0., ..., 0., 0., 0.],

[0., 0., 0., ..., 0., 0., 0.],

[0., 0., 0., ..., 0., 0., 0.]]])}